What is Add & Norm, as quick as possible?

This article is inspired by the “Attention Is All You Need” paper and its explanation by Umar Jamil.

Ever wondered what the “Add & Norm” layer in a Transformer entails? Why do we use it? What role does it play in the Transformer architecture? If so, this article is for you.

The Add & Norm layer, as the name suggests, consists of two parts: add and norm. It is also known as Residual Connection followed by Layer Normalization.

Why do we use it?

To stabilize and improve the training of deep neural networks.

How does it work?

As mentioned previously, it combines two steps: a residual connection followed by layer normalization.

Part 1: Residual Connection (Add)

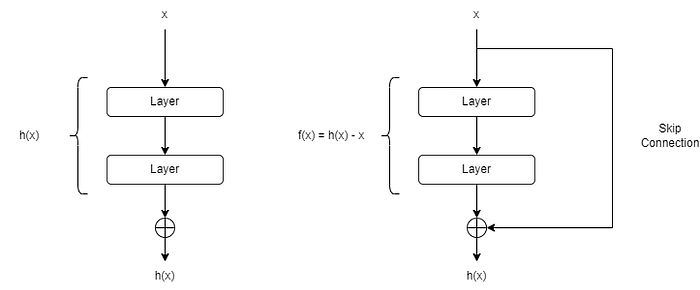

A residual connection, also known as a skip connection, is a technique used in deep neural networks to facilitate the training of very deep architectures. This technique was introduced in ResNet (Residual Networks) by Kaiming He et al. in 2015 [1]. ResNet is based on a concept called residual learning. The main idea is to allow the input to a layer to bypass the layer’s operations and be added directly to the layer’s output.

The layer’s operations can be anything: linear transformations, non-linear transformations, normalization, dropout, pooling, etc.

Now to understand how residual connection works, let’s look into an example:

Suppose you design a multi-layer subnetwork whose input is x, and your goal is to make it model a target function h(x).

Residual learning re-parameterizes this subnetwork and lets the parameter layers represent a “residual function.” In other words, instead of approximating the underlying function h(x) directly, the layers are made to approximate a residual function f(x).

Without a residual connection, the layers must produce y = h(x) themselves. With a residual connection, the output is y = f(x) + x.

So, for the output to match the target h(x), the layers only need to estimate f(x) = h(x) − x.

The operation of adding the input (+x) is done using a “skip connection,” as shown in Figures 2 and 3.

The advantage of residual learning in Transformers is that it facilitates signal propagation along both the forward and backward paths, which mitigates the vanishing gradient problem. Deep networks such as the Transformer can suffer from vanishing gradients, and the skip connection helps preserve the diminishing gradients by adding an identity mapping alongside the layer. Let’s see how this works mathematically.
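To make the y = f(x) + x relationship concrete, here is a minimal sketch in plain Python. The helper `residual` and the toy sublayers `small_sublayer` and `zero_sublayer` are hypothetical names chosen for illustration; a real Transformer would use a tensor library and an attention or feed-forward sublayer in place of f.

```python
def residual(f):
    """Wrap a sublayer f so that the wrapped version computes f(x) + x."""
    def wrapped(x):
        fx = f(x)
        if len(fx) != len(x):
            # The skip connection adds elementwise, so shapes must match.
            raise ValueError("f(x) must have the same length as x")
        return [fi + xi for fi, xi in zip(fx, x)]
    return wrapped


def small_sublayer(x):
    # Hypothetical sublayer: a fixed scaling standing in for learned layers.
    return [0.1 * v for v in x]


def zero_sublayer(x):
    # A sublayer that has learned "nothing": it outputs all zeros.
    return [0.0 for _ in x]


x = [1.0, 2.0, 3.0]
y = residual(small_sublayer)(x)            # f(x) + x
identity_out = residual(zero_sublayer)(x)  # reduces to the identity mapping
```

Note that when the sublayer outputs zeros, the block simply passes x through unchanged, which is the identity mapping that the skip connection provides.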

Forward propagation

From the above figure, we know that y = f(x) + x. This y can be the input to another set of layers in the network, but to keep things as simple as possible we will not consider that for now.

So for now our general relationship for FP will be:

y = f(x) + x

Backward propagation

This is where the residual connection addresses the vanishing gradient problem. To compute the gradients, we consider the partial derivative of a loss function ε with respect to the input x. Applying the chain rule to y = f(x) + x, the backpropagation equation is:

∂ε/∂x = ∂ε/∂y · ∂y/∂x = ∂ε/∂y · (∂f(x)/∂x + 1) = ∂ε/∂y · ∂f(x)/∂x + ∂ε/∂y

With this equation, even if the gradient ∂f(x)/∂x diminishes, the overall gradient does not vanish, because of the extra ∂ε/∂y term contributed by the skip connection.
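The effect of the extra term becomes clearer when several blocks are stacked. The sketch below is a toy calculation, not a real network: it assumes scalar layers whose local gradient ∂f/∂x is a fixed small number (0.1, chosen arbitrarily), takes ∂ε/∂y at the top to be 1, and multiplies the per-layer factors via the chain rule. The function name `gradient_through_stack` is hypothetical.

```python
def gradient_through_stack(local_grads, use_skip):
    """Chain-rule product of per-layer factors, from the output back to the input.

    Without a skip connection each layer contributes df/dx;
    with one, each layer contributes df/dx + 1 (from y = f(x) + x).
    """
    grad = 1.0  # assumed gradient of the loss w.r.t. the final output
    for df_dx in reversed(local_grads):
        grad *= (df_dx + 1.0) if use_skip else df_dx
    return grad


# Assumption: 20 layers, each with a small local gradient of 0.1.
local_grads = [0.1] * 20

plain = gradient_through_stack(local_grads, use_skip=False)  # 0.1 ** 20, effectively vanished
skip = gradient_through_stack(local_grads, use_skip=True)    # 1.1 ** 20, still well above zero
```

In this toy setting the plain stack's gradient shrinks to around 1e-20, while the stack with skip connections keeps a usable gradient because every factor stays close to 1.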

Part 2: Layer Normalization (Norm)

Layer normalization normalizes the output of the previous operation across the features.

It is very important to note that the function normalizes the output across the “features.”

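During layer normalization, each item in the batch is normalized independently so that its features have a mean of 0 and a variance of 1 (the values are not squeezed into the range 0–1). An item can be anything; in the case of Transformers it is typically the 512-dimensional embedding vector of a word. We compute the mean μ and variance σ² over the features of each item and replace each value x_j with:

x̂_j = (x_j − μ) / √(σ² + eps)

where eps is a small constant added for numerical stability.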
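As a rough illustration of normalizing across the features of a single item, here is a minimal sketch in plain Python. The function name `layer_norm`, the 4-dimensional toy vector, and the value `eps=1e-5` are assumptions made for the example; the learnable scale and shift discussed later are deliberately left out here.

```python
import math


def layer_norm(x, eps=1e-5):
    """Normalize one item (a list of features) to roughly zero mean, unit variance."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)  # population variance over features
    return [(v - mean) / math.sqrt(var + eps) for v in x]


# Toy "embedding" with 4 features instead of 512.
token = [2.0, 4.0, 6.0, 8.0]
normed = layer_norm(token)

normed_mean = sum(normed) / len(normed)
normed_var = sum((v - normed_mean) ** 2 for v in normed) / len(normed)
```

The normalized values are centred on zero, so some of them are negative, and their variance is very close to 1. Only the features of this one item are used; no other item in the batch affects the result.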

After understanding this, two questions came to my mind:

1. Why do we perform normalization?

2. Why not batch normalization?

Question 1: Why do we perform normalization?

Answer: Covariate shift

“Internal Covariate Shift is the change in the distribution of network activations due to the change in network parameters during training” — Ioffe & Szegedy [3].

During training, the outputs that the neurons in a layer produce after applying the activation function to the weighted sum of their inputs are called activations. The distribution of these activations changes over time as the network parameters change.

Normalization mitigates this shift by keeping the distribution of activations stable, which makes the training process smoother.

Question 2: Why not batch normalization?

Answer: Because sentences vary in length.

When dealing with sequential data, batch normalization is usually not recommended because sentence lengths vary. Batch normalization computes its statistics across a mini-batch. If the sequences have different lengths, they must be padded or truncated, which may introduce noise into the data.

For example, let’s consider the following sentences [4]:

1. I am a boy

2. I love swimming

3. I am a graduate student from a pristine university and currently majoring Data Science.

These sentences will be converted into:

- I am a boy [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD]

- I love swimming [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD] [PAD]

- I am a graduate student from a pristine university and currently majoring Data Science.

As we can see, the padding tokens dominate some sentences, which results in skewed statistics. This padding affects the computation of the mean and variance across the mini-batch and thereby introduces noise into the normalization process.
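The skew can be seen in a small numeric sketch. Everything here is made up for illustration: the tokens are 2-dimensional toy vectors, padding is assumed to be represented by an all-zero vector, and `feature_means` is a hypothetical helper that computes a per-feature mean across all tokens in the mini-batch, which is the kind of statistic batch normalization relies on.

```python
PAD = [0.0, 0.0]  # assumption: padding positions hold all-zero vectors

# Three sequences padded to length 4; `lengths` records the real token counts.
batch = [
    [[1.0, 3.0], [2.0, 4.0], PAD, PAD],
    [[3.0, 5.0], PAD, PAD, PAD],
    [[1.0, 1.0], [2.0, 2.0], [3.0, 3.0], [4.0, 4.0]],
]
lengths = [2, 1, 4]


def feature_means(tokens):
    """Per-feature mean across a list of token vectors."""
    dim = len(tokens[0])
    return [sum(t[j] for t in tokens) / len(tokens) for j in range(dim)]


all_tokens = [t for seq in batch for t in seq]
real_tokens = [t for seq, n in zip(batch, lengths) for t in seq[:n]]

means_with_pad = feature_means(all_tokens)      # statistics polluted by padding
means_without_pad = feature_means(real_tokens)  # statistics of the real tokens only
```

With 5 of the 12 positions being padding, the per-feature means computed over the whole mini-batch are pulled well below the means of the real tokens. Layer normalization sidesteps this because each token is normalized using only its own features.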

Shortcomings of Layer Normalization in Transformers

While layer normalization provides several advantages, it also has some limitations. One of the primary shortcomings is that forcing every item to have a mean of 0 and a variance of 1 can be too restrictive. This limitation can impede the model’s ability to learn effectively.

To mitigate this issue, layer normalization includes two learnable parameters:

- Gamma (Multiplicative)

- Beta (Additive)

These parameters are learnable, meaning the model adjusts them during training. Gamma and Beta allow the model to determine when to amplify or diminish certain values, thus introducing necessary fluctuations in the data.

Why are these parameters important?

- Gamma (γ): This parameter scales the normalized output. By learning the appropriate scaling factor, the model can adjust the normalized values to a range that is more suitable for the task at hand.

- Beta (β): This parameter shifts the normalized output. It allows the model to add a certain value to the normalized output, providing the flexibility to adjust the mean of the data.

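After adding these two parameters, the normalization equation becomes:

y_j = γ · (x_j − μ) / √(σ² + eps) + β

where μ and σ² are the mean and variance over the features of the item, and eps is a small constant added for numerical stability.

By learning these parameters, the model is no longer restricted to outputs with a mean of 0 and a variance of 1. Instead, it introduces fluctuations when necessary, allowing for a more dynamic and adaptive learning process. This enhances the model’s ability to capture and represent complex patterns in the data.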
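Putting both parts together, the whole Add & Norm step can be sketched as a layer normalization with scale and shift applied to x + Sublayer(x). This is a minimal plain-Python sketch under stated assumptions: γ and β are shown as fixed per-feature lists rather than trained parameters, `double` is a hypothetical stand-in for an attention or feed-forward sublayer, and the function names are chosen for illustration only.

```python
import math


def layer_norm(x, gamma, beta, eps=1e-5):
    """Normalize over the features of one item, then scale by gamma and shift by beta."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [
        g * (v - mean) / math.sqrt(var + eps) + b
        for v, g, b in zip(x, gamma, beta)
    ]


def add_and_norm(x, sublayer, gamma, beta):
    """Residual connection (Add) followed by layer normalization (Norm)."""
    summed = [xi + si for xi, si in zip(x, sublayer(x))]
    return layer_norm(summed, gamma, beta)


def double(x):
    # Hypothetical sublayer used only for this example.
    return [2.0 * v for v in x]


x = [1.0, 2.0, 3.0, 4.0]
gamma = [2.0] * len(x)  # assumed values; in a real model these are learned
beta = [1.0] * len(x)

out = add_and_norm(x, double, gamma, beta)
out_mean = sum(out) / len(out)
out_var = sum((v - out_mean) ** 2 for v in out) / len(out)
```

With these example values the output has a mean close to 1 (set by β) and a variance close to 4 (set by γ squared), showing how the two parameters move the result away from the fixed zero-mean, unit-variance scale.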

Conclusion

In conclusion, the Add & Norm layer, which combines residual connections and normalization, plays a crucial role in Transformer models. The residual connection (Add) enhances gradient flow and optimizes learning by allowing gradients to pass through the network more effectively. Meanwhile, normalization helps stabilize the gradients, improving generalization and accelerating the training process. Together, these components enable the model to train deeper architectures more efficiently and effectively, addressing issues like vanishing gradients and restrictive data scales.

References

[1] Kaiming He, Xiangyu Zhang, Shaoqing Ren, Jian Sun. Deep Residual Learning for Image Recognition. 2015.

[2] Aurélien Géron. Hands-On Machine Learning with Scikit-Learn, Keras & TensorFlow, 2nd Edition.

[3] Sergey Ioffe, Christian Szegedy. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. 2015.

[4] TensorFlow. Understanding Masking and Padding. https://www.tensorflow.org/guide/keras/understanding_masking_and_padding